The 80s called; they want their memory manager back!

Not too long ago I was presenting on the subject of iPhone development and Objective-C at the Stackoverflow conference. As I wrote at the time, the audience was predominantly .Net developers and the Twitter backchat was filled with a whole range of reactions. Actually there were two themes to that reaction. One was to the syntax of Objective-C, which is certainly very different to most C-family languages. The other was to the fact that Objective-C for the iPhone is not garbage collected (although it is on the Mac these days). My favourite comment on Twitter was the title of this post.[*]

Much has been said from both sides about whether the iPhone should support garbage collection or not and I won't go into the debate here, other than to say that I think there are valid reasons for it being that way - but that's not to say that it couldn't be made to work (it would be nice if it was at least optional).

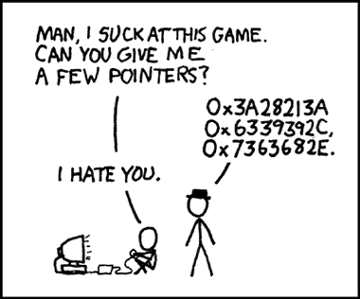

A few pointers

As a grizzled C++ developer in past (and, as it happens, present) lives I'm not fazed by the sight of a raw pointer or the need to count references. That said I struggle to remember the last time I had a memory leak, a dangling pointer or a double-deletion. Is that because I'm an awesome C++ demigod who eats managed programmers for breakfast? No! Not just that! It's also because I use smart pointers.

Smart pointers make use of a feature of C++ that is disappointingly rare in modern programming languages (and often seen as incompatible with garbage collection): deterministic destruction. Put simply, when a value goes out of scope it is destroyed there and then. There is no waiting for a garbage collector to kick in at some undetermined, and indeterminate, time to clean up after you. Then why does C++ have the delete keyword? Well, I said when a value goes out of scope. If you allocate an object on the heap, the value you have in scope is the pointer to the object. C++ also allows you to create objects on the stack, and these are destroyed at the end of the scope in which they were created (there is a third case where an object is declared by value as a member of another object that lives on the heap - but I'll ignore that for simplicity). When that object is an instance of a class the developer has defined, they can supply a destructor that is run when the object is destroyed. Often this is used to delete memory owned by the object - but it could be used to clean up any resources the object is managing, such as file handles, database connections etc.

Smart pointers manage objects on the heap. Depending on the type of smart pointer they may simply delete an object in their destructor - or they may offer shared ownership semantics by managing a reference count - so destructors decrement the ref count and only delete when the count reaches zero.
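To make that a little more concrete, here is a minimal sketch using the standard library's smart pointers. The `Resource` class, `destruction_log()` and `demo_scopes()` are names I've made up purely for illustration; the only real library pieces are `std::unique_ptr` and `std::shared_ptr`.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical helper: records the order in which Resources are destroyed.
inline std::vector<std::string>& destruction_log() {
    static std::vector<std::string> log;
    return log;
}

// Hypothetical resource-owning type. Its destructor runs deterministically,
// so it is the natural place to release memory, file handles and so on.
class Resource {
public:
    explicit Resource(std::string name) : name_(std::move(name)) {}
    ~Resource() { destruction_log().push_back(name_); }
    Resource(const Resource&) = delete;
    Resource& operator=(const Resource&) = delete;
private:
    std::string name_;
};

// Returns the reference count observed while two shared owners exist.
inline long demo_scopes() {
    long observed = 0;
    {
        Resource onStack("stack");                           // lives on the stack
        auto unique = std::make_unique<Resource>("unique");  // sole owner of a heap object
        auto shared = std::make_shared<Resource>("shared");  // ref-counted heap object
        {
            auto second = shared;           // second owner: count goes up to 2
            observed = shared.use_count();
        }                                   // second destroyed: count drops to 1
        assert(shared.use_count() == 1);    // the object itself is still alive
    }   // end of scope: shared, unique, onStack destroyed in that order
    return observed;
}
```

Nothing here calls delete. Every object is cleaned up at a closing brace, and the shared object only goes away when its last owner does.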

I've crammed an overview of a rich and incredibly powerful language feature into a single paragraph above. I haven't even touched on how smart pointers use operator overloading to look like pointers. You can read more details elsewhere. My point is that, because of smart pointers - made possible by deterministic destruction (or more generally an idiom unfortunately known as RAII) - garbage collection is not really missed in C++.

Using {

All of which is the long-winded setup to my story for this post. For the last couple of days I have been involved with tracking down an issue in a .Net app. I say "involved with" because there were three of us looking at this one problem. Three developers, with more than half a century of professional development experience between us, all on the critical path, tracking down this one problem. In .Net. The problem turned out to be with garbage collection. Actually there was more than one problem.

Is that a jagged array in your pocket?

Now this is not about knocking garbage collection. Or .Net. This is about how things are not always as they appear. In fact that's exactly what the first issue was. We have C++/CLI classes that are really just thin proxy wrappers for native C++ objects. The C++ objects are often multi-dimensional arrays and can get quite big. The problem was that the "payload" of these objects was effectively invisible to .Net. As far as it was concerned it was dealing with thousands of tiny objects. The result was that, when called from C#, it was happily creating more and more of these without garbage collecting the large number of, now unreferenced, older objects!

Actually we had anticipated this state of affairs and had made sure that all these proxy objects were disposable. This meant that in C# we could wrap the usage in a using block and the objects would be disposed of at the end of the using scope. The problem with this is that there is no way to enforce the use of using. By contrast: in C++ if you implement clean-up code in a destructor it will always be called at the end of scope (OK, if you create it on the heap there is no "end of scope" and you're back to square one. I'd argue that you have to make more of an effort to do this, however - and there are also tricks you can use to detect it).

As it happens using is not really the right tool here anyway. The vast majority of these objects, whether boxed primitives (we have a Variant type) or arrays, are semantically just values. Using using with "value" types is clumsy and often impractical. What we would really like is a way to tell the GC that these proxies have a native payload - and have it take that into consideration when deciding when and what to collect. Of course we have just such a facility: GC.AddMemoryPressure() and GC.RemoveMemoryPressure(). These methods allow you to tell .Net that a particular managed object also refers to unmanaged memory of a particular size (in fact you can incrementally add and remove "pressure"). Sounds like just what we need.

Unfortunately there is more to it. First we need to know what "pressure" to apply. If the data is immutable and fully populated by the time the proxy sees it then that is one thing. But if data can be added and removed from both managed and native sides it becomes more tricky. To do it properly we'd have to add hooks deeper into the native array objects so that we know when to apply more or less pressure. Furthermore many of our arrays are really jagged arrays, implemented in terms of Variants containing Variant arrays of more Variants of Variant arrays (if that sounds appalling you probably haven't worked in a bank. Or maybe you have). How can we keep track of the memory being consumed by such structures? Well, it helps to keep in mind that we don't really need to know the exact number of bytes. It should be enough to give the GC hints that let it bucket sizes into, say, orders of magnitude (I don't know exactly how the GC builds its collection strategies and I don't want to second-guess it. I'd imagine even this is more granularity than it needs most of the time, but it seems a reasonable level of accuracy to strive for). Fortunately I already have code that can walk these structures and come back with effective fixed array dimensions, so multiplying that by the size of a Variant should get us well into the ballpark we need.
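I can't show the real code, but the estimate might look something like the sketch below. Everything in it is an assumption for illustration: this `Variant` is a toy stand-in (a scalar, or an array of more Variants), and `collect_extents()` and `estimate_payload_bytes()` are hypothetical names. The idea is just to find the longest array at each nesting depth, treat those as the "effective fixed dimensions", and multiply by the size of a Variant.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Toy stand-in for the real Variant: a scalar unless `elements` is non-empty,
// in which case it is an array of further Variants.
struct Variant {
    double scalar = 0.0;
    std::vector<Variant> elements;
};

// Hypothetical builders, for illustration only.
inline Variant scalar(double d) { Variant v; v.scalar = d; return v; }
inline Variant array_of(std::size_t n) { Variant v; v.elements.assign(n, scalar(0.0)); return v; }

// Records, for each nesting depth, the longest array seen at that depth.
inline void collect_extents(const Variant& v, std::size_t depth,
                            std::vector<std::size_t>& extents) {
    if (v.elements.empty()) return;   // scalars contribute no dimension
    if (extents.size() <= depth) extents.resize(depth + 1, 0);
    extents[depth] = std::max(extents[depth], v.elements.size());
    for (const Variant& child : v.elements)
        collect_extents(child, depth + 1, extents);
}

// Ballpark size: product of the per-depth extents times sizeof(Variant).
// This over-estimates jagged arrays, which is fine for a coarse hint.
inline std::size_t estimate_payload_bytes(const Variant& root) {
    std::vector<std::size_t> extents;
    collect_extents(root, 0, extents);
    std::size_t cells = 1;
    for (std::size_t e : extents) {
        // Guard against overflow in the running product.
        assert(cells <= std::numeric_limits<std::size_t>::max() / e);
        cells *= e;
    }
    return cells * sizeof(Variant);
}
```

A jagged array with rows of length 3, 1 and 2 comes out as 3 x 3 = 9 cells. On the managed side a number like this is what you would hand to GC.AddMemoryPressure() when the proxy is created, and give back with GC.RemoveMemoryPressure() when it goes away.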

A weak solution

So that was one problem (with several sub-problems). The other was that a certain set of these objects were being tracked in a static Dictionary in the proxy infrastructure. Setting aside, for a moment, the horrors of globals, statics and (shudder) singletons, let's assume that there were valid reasons for needing to do this. The problem here was that the references in the dictionary were naturally keeping the objects alive beyond their useful lifetime! In a way this was a memory leak! Yes you can get memory leaks in garbage collected languages. Of course what we should have been doing (and now are doing) is to hold weak references in the static dictionary. I'd guess, however, that many .Net developers are not even aware of WeakReference. Why should they be? Managed Code is supposed to, well, "manage" your "code" isn't it? Anyway, not surprisingly switching to weak references here solved that problem (and somewhat more easily than the other one).
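The actual fix used .Net's WeakReference, but the same idea can be sketched in C++ with `std::weak_ptr`, which is the closest analogue I know of. `Proxy` and `ProxyRegistry` below are hypothetical names, not our real infrastructure: the registry can look objects up while they are alive, but it never keeps them alive by itself.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <unordered_map>

// Hypothetical stand-in for one of the tracked proxy objects.
struct Proxy {
    std::string id;
};

// A registry that tracks objects without owning them.
class ProxyRegistry {
public:
    // Stores a weak reference: this does not extend the object's lifetime.
    void track(const std::shared_ptr<Proxy>& p) {
        assert(p != nullptr);
        entries_[p->id] = p;
    }

    // Returns the object if it is still alive, or an empty pointer otherwise.
    std::shared_ptr<Proxy> find(const std::string& id) const {
        auto it = entries_.find(id);
        if (it == entries_.end()) return std::shared_ptr<Proxy>();
        return it->second.lock();
    }

    // Drops entries whose objects have already been destroyed.
    // Returns how many entries were removed.
    std::size_t prune() {
        std::size_t removed = 0;
        for (auto it = entries_.begin(); it != entries_.end();) {
            if (it->second.expired()) {
                it = entries_.erase(it);
                ++removed;
            } else {
                ++it;
            }
        }
        return removed;
    }

    std::size_t size() const { return entries_.size(); }

private:
    std::unordered_map<std::string, std::weak_ptr<Proxy>> entries_;
};
```

Note that the dead entries themselves still take up a little space until something calls `prune()`. That is a much smaller leak than the one we started with, but it is worth remembering.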

I'll say again - I'm not having a dig at .Net developers - or anyone else who primarily targets "managed" languages these days. I've used them for years myself. My point is that there is a lot more to it and you don't have to go too far to hit the pathological cases. And when you do hit them things get tricky pretty fast. In addition to the fundamental problems discussed above I spent a lot of time profiling and experimenting with different frequencies and placements of calls to GC.Collect() and GC.WaitForPendingFinalizers(). None of these things were necessary in the pure C++ code (admittedly they are not often necessary in pure C# code either), but when they are it can be very confusing if you're not prepared.

}

phew!

Now I started out talking about Objective-C but have ended up talking mostly about C++ and C# (although the lessons have been more general). To bring us back to Objective-C, though, where does it fit in to all this?

Clearly destructive

Well, on the iPhone Objective-C lacks garbage collection, as we already noted. It also lacks deterministic destruction (or destructors as a language concept at all). So does that leave us in the Wild West of totally manual C-style memory management? Not quite. Probably the biggest issue with memory management in C is not that you have to do it manually. Personally I think a bigger issue is a lack of universally agreed conventions for ownership. If a function allocates some memory who owns that memory? The caller? Maybe - except when they don't! Some libraries, and most frameworks, establish their own conventions for these things - which is fine. But they're not all the same conventions. So not only is it not always immediately obvious if you are supposed to clean up some memory - but the confusion makes it less likely that you will remember to at all (because, subconsciously, you're putting off the need to work it out for as long as possible).

Objective-C, at least in Apple's world, doesn't have this problem. There is a very simple, clear and concise set of rules for ownership that are universally adopted:

If the name of a function or method begins with alloc or new, or has the word copy in it, or you explicitly call retain - the caller owns the object. Otherwise they don't.

That's it! There are some extra wrinkles, mostly to do with autorelease, but they still follow those rules.

Other than some circular references I had once, and quickly resolved, I don't recall having any problems with memory management in Objective-C for a long time either.

Epilogue

There. I've discussed memory management in four languages in a single post - and not mentioned the iPad once! As it happens you can use all four languages to write for the iPhone or iPad. So take your pick of memory management trade-offs.

[*] I tried to track down who tweeted "The 80s called; they want their memory manager back" during Stackoverflow Devdays. As it's difficult to search Twitter very far into the past I couldn't find the original tweet - but thanks to a retweet by Nick Sertis it appears that it was J. Mark Pim. Thanks J.